We have an online archive, currently only available in-house, of all printed issues of the Financial Times newspaper, from the first issue in 1888 through to 2010. Each page of each issue has been photographed, divided into distinct articles, and each article has been processed with OCR (Optical Character Recognition) to extract the source text from the image.

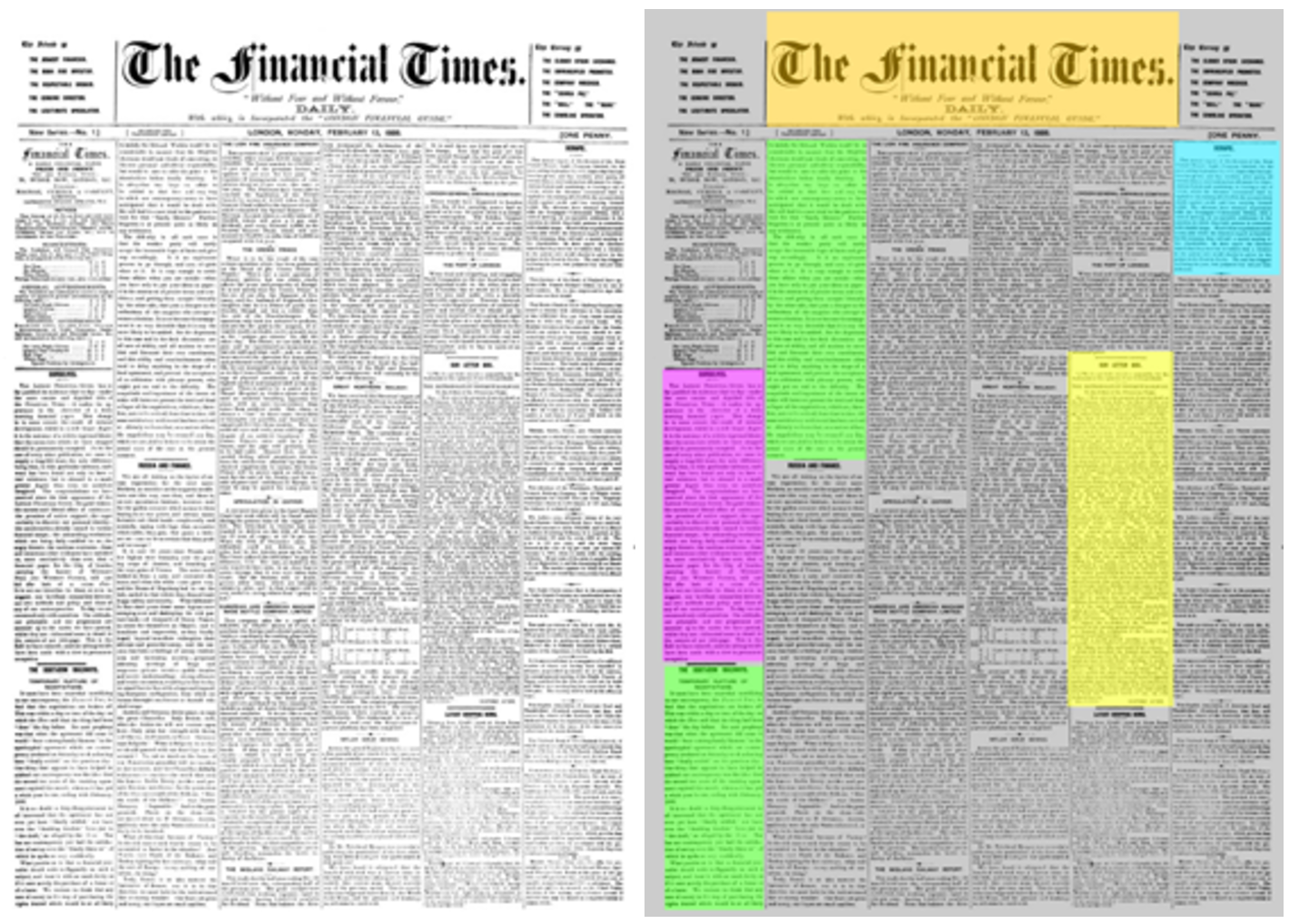

The archive weighs in at approximately 2 TB of JPGs (one for each page of each issue) and XML (one monster file per issue, containing the positional data and titles of each article, and the text fragments identified within each article).

As we consider how to integrate the content of our archive into the main site, as others have done, one significant step is to improve the OCR: the extracted text varies from almost perfect transcription to random noise.

We used open-source software, Tesseract, with its default settings, to achieve a very gratifying improvement in OCR quality.

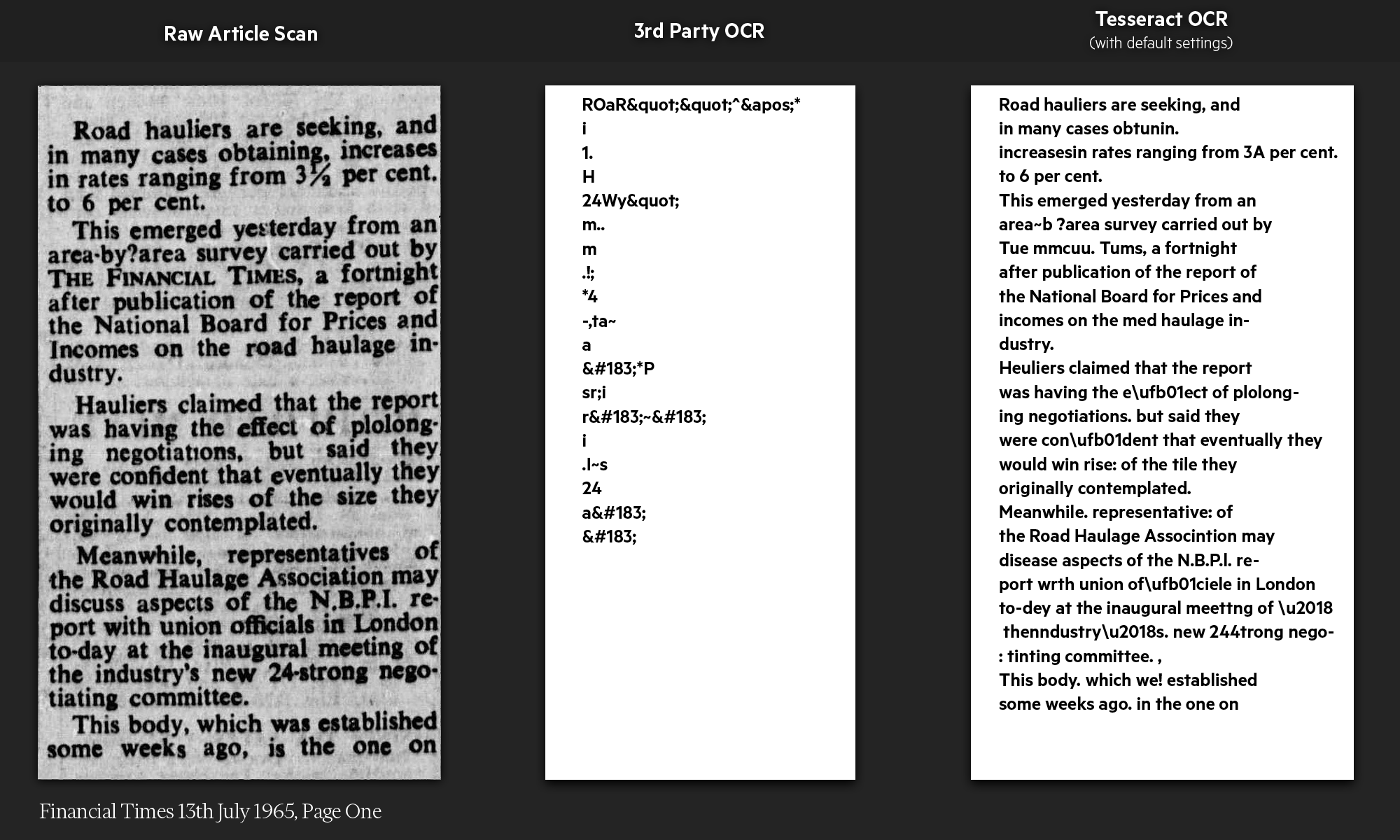

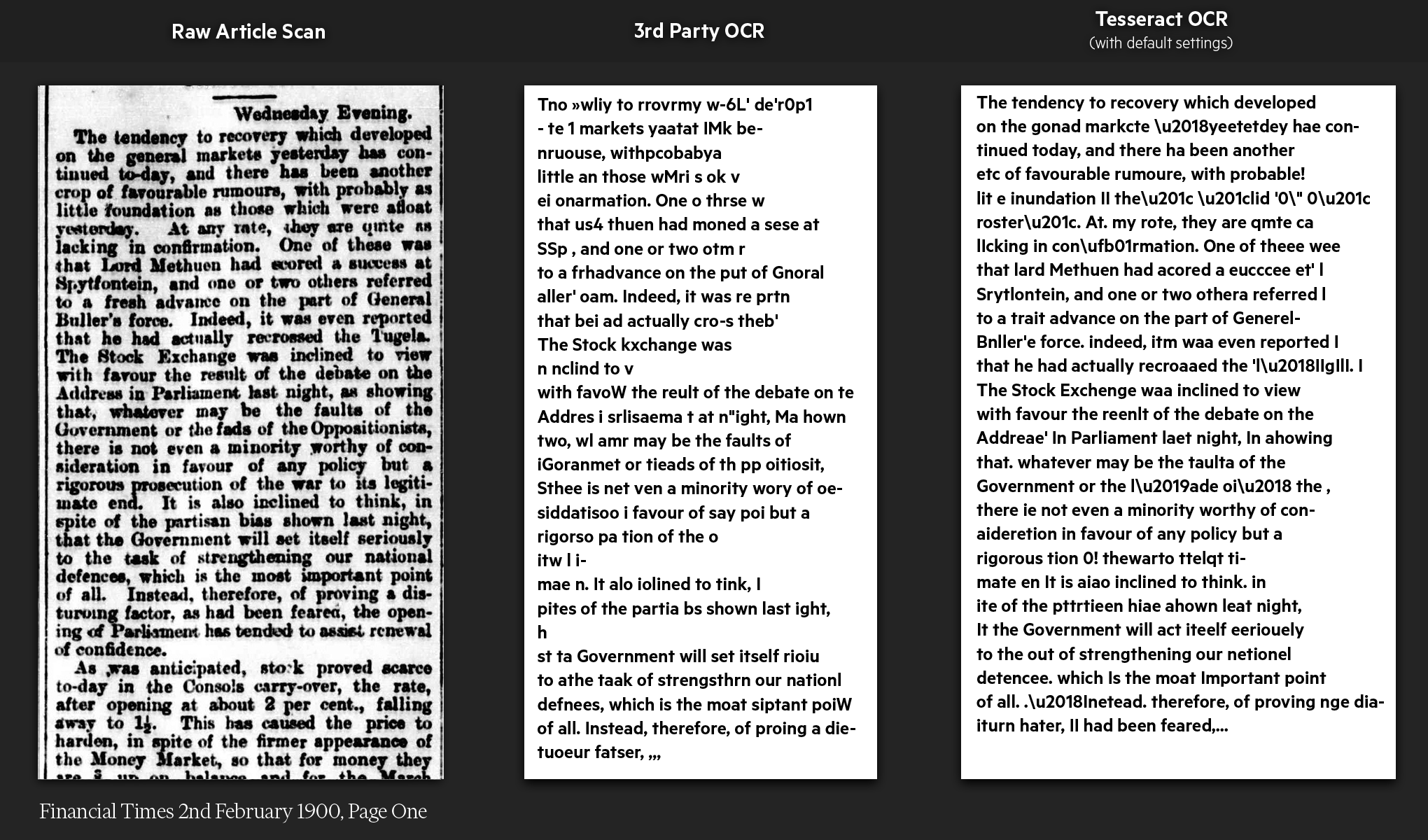

On the left, we have an article which has been cropped from its parent page according to the coordinates stored in the XML. In the middle, we have the original OCR text that we received from the 3rd party. And on the right we have the results of a default OCR scan from Tesseract. It’s not perfect, but it’s rather good for an off-the-shelf piece of kit. And what’s even more impressive is that Tesseract correctly identified a 55 year old spelling error!

We continued with Tesseract to make further OCR improvements, described in a later post, but the remainder of this post describes how we used the out-of-the-box version to make the first big improvement. These posts are based on notes from a presentation given at Barcamp Southampton.

The problem: inconsistent OCR quality

The problem we have here, is that the archived page image quality has changed over time. The settings that create the ideal circumstance for OCR aren’t constant across the entirety of our dataset. This is unfortunate, but the 3rd party company we engaged was hardly going to manually dial in the OCR settings for each and every one of the individual issues.

One thing to note is that although the general quality of the page images varies across the century, each decade is largely consistent within itself. The clarity and level of detail in the images from a January 1960 edition is roughly equivalent to the images from a December 1969 edition. That said, the images are quite noisy, often misaligned, sometimes with movement-induced discontinuities across the middle of a page. However, the bigger problem isn’t so much “Can we scan the entire page or entire issue in one pass” but more “Can we scan decades of issues without too much configuration and with a reasonable result. Also we’ve changed fonts across the decades! Oh, the paper, ink, and printing machinery also varies.”

The opportunity: good positional data and Tesseract

The initial OCR content was not particularly high quality, BUT the data in the XML files describing the location of that content was both comprehensive and accurate. This meant that we could use the XML files to re-run OCR on the images on an article-by-article basis.

Plus, there was Tesseract, a piece of open-source software that’s really good at one thing – OCR scanning.

We picked a few articles at random from across the decades and ran Tesseract with its default settings, and got the spectacular results above.

To be slightly fairer to the original provider of our OCR, here is another, more typical improvement in OCR

The improvement brought about by using Tesseract is sufficient to justify re-doing the OCR across the entire archive but, even so, there are still sections of the archive which remain illegible (for our OCR).

Gotchas

There are several challenges we noted but parked for the duration of this project

- Combining articles which are split across columns or pages

- Identifying adverts (rather than journalistic copy)

- Handling tabular text